Writing reversible jump MCMC in NIMBLE

Writing reversible jump MCMC samplers in NIMBLE

Introduction

Reversible jump Markov chain Monte Carlo (RJMCMC) is a powerful method for drawing posterior samples over multiple models by jumping between models as part of the sampling. For a simple example that I’ll use below, think about a regression model where we don’t know which explanatory variables to include, so we want to do variable selection. There may be a huge number of possible combinations of variables, so it would be nice to explore the combinations as part of one MCMC run rather than running many different MCMCs on some chosen combinations of variables. To do it in one MCMC, one sets up a model that includes all possible variables and coefficients. Then “removing” a variable from the model is equivalent to setting its coefficient to zero, and “adding” it back into the model requires a valid move to a non-zero coefficient. Reversible jump MCMC methods provide a way to do that.

Reversible jump is different enough from other MCMC situations that packages like WinBUGS, OpenBUGS, JAGS, and Stan don’t do it. An alternative way to set up the problem, which does not involve the technicality of changing model dimension, is to use indicator variables. An indicator variable is either zero or one and is multiplied by another parameter. Thus when the indicator is 0, the parameter that is multipled by 0 is effectively removed from the model. Darren Wilkinson has a nice old blog post on using indicator variables for Bayesian variable selection in BUGS code. The problem with using indicator variables is that they can create a lot of extra MCMC work and the samplers operating on them may not be well designed for their situation.

NIMBLE lets one program model-generic algorithms to use with models written in the BUGS language. The MCMC system works by first making a configuration in R, which can be modified by a user or a program, and then building and compiling the MCMC. The nimbleFunction programming system makes it easy to write new kinds of samplers.

The aim of this blog post is to illustrate how one can write reversible jump MCMC in NIMBLE. A variant of this may be incorporated into a later version of NIMBLE.

Example model

For illustration, I’ll use an extremely simple model: linear regression with two candidate explanatory variables. I’ll assume the first, x1, should definitely be included. But the analyst is not sure about the second, x2, and wants to use reversible jump to include it or exclude it from the model. I won’t deal with the issue of choosing the prior probability that it should be in the model. Instead I’ll just pick a simple choice and stay focused on the reversible jump aspect of the example. The methods below could be applied en masse to large models.

Here I’ll simulate data to use:

N <- 20 x1 <- runif(N, -1, 1) x2 <- runif(N, -1, 1) Y <- rnorm(N, 1.5 + 0.5 * x1, sd = 1)

I’ll take two approaches to implementing RJ sampling. In the first, I’ll use a traditional indicator variable and write the RJMCMC sampler to use it. In the second, I’ll write the RJMCMC sampler to incorporate the prior probability of inclusion for the coefficient it is sampling, so the indicator variable won’t be needed in the model.

First we’ll need nimble:

library(nimble)

RJMCMC implementation 1, with indicator variable included

Here is BUGS code for the first method, with an indicator variable written into the model, and the creation of a NIMBLE model object from it. Note that although RJMCMC technically jumps between models of different dimensions, we still start by creating the largest model so that changes of dimension can occur by setting some parameters to zero (or, in the second method, possibly another fixed value).

simpleCode1 <- nimbleCode({ beta0 ~ dnorm(0, sd = 100) beta1 ~ dnorm(0, sd = 100) beta2 ~ dnorm(0, sd = 100) sigma ~ dunif(0, 100) z2 ~ dbern(0.8) ## indicator variable for including beta2 beta2z2 <- beta2 * z2 for(i in 1:N) { Ypred[i] <- beta0 + beta1 * x1[i] + beta2z2 * x2[i] Y[i] ~ dnorm(Ypred[i], sd = sigma) } }) simpleModel1 <- nimbleModel(simpleCode1, data = list(Y = Y, x1 = x1, x2 = x2), constants = list(N = N), inits = list(beta0 = 0, beta1 = 0, beta2 = 0, sigma = sd(Y), z2 = 1))

Now here are two custom samplers. The first one will sample beta2 only if the indicator variable z2 is 1 (meaning that beta2 is included in the model). It does this by containing a regular random walk sampler but only calling it when the indicator is 1 (we could perhaps set it up to contain any sampler to be used when z2 is 1, but for now it’s a random walk sampler). The second sampler makes reversible jump proposals to move beta2 in and out of the model. When it is out of the model, both beta2 and z2 are set to zero. Since beta2 will be zero every time z2 is zero, we don’t really need beta2z2, but it ensures correct behavior in other cases, like if someone runs default samplers on the model and expects the indicator variable to do its job correctly. For use in reversible jump, z2’s role is really to trigger the prior probability (set to 0.8 in this example) of being in the model.

Don’t worry about the warning message emitted by NIMBLE. They are there because when a nimbleFunction is defined it tries to make sure the user knows anything else that needs to be defined.

RW_sampler_nonzero_indicator <- nimbleFunction( contains = sampler_BASE, setup = function(model, mvSaved, target, control) { regular_RW_sampler <- sampler_RW(model, mvSaved, target = target, control = control$RWcontrol) indicatorNode <- control$indicator }, run = function() { if(model[[indicatorNode]] == 1) regular_RW_sampler$run() }, methods = list( reset = function() {regular_RW_sampler$reset()} ))

## Warning in nf_checkDSLcode(code): For this nimbleFunction to compile, these ## functions must be defined as nimbleFunctions or nimbleFunction methods: ## reset.

RJindicatorSampler <- nimbleFunction( contains = sampler_BASE, setup = function( model, mvSaved, target, control ) { ## target should be the name of the indicator node, 'z2' above ## control should have an element called coef for the name of the corresponding coefficient, 'beta2' above. coefNode <- control$coef scale <- control$scale calcNodes <- model$getDependencies(c(coefNode, target)) }, run = function( ) { ## The reversible-jump updates happen here. currentIndicator <- model[[target]] currentLogProb <- model$getLogProb(calcNodes) if(currentIndicator == 1) { ## propose removing it currentCoef <- model[[coefNode]] logProbReverseProposal <- dnorm(0, currentCoef, sd = scale, log = TRUE) model[[target]] <<- 0 model[[coefNode]] <<- 0 proposalLogProb <- model$calculate(calcNodes) log_accept_prob <- proposalLogProb - currentLogProb + logProbReverseProposal } else { ## propose adding it proposalCoef <- rnorm(1, 0, sd = scale) model[[target]] <<- 1 model[[coefNode]] <<- proposalCoef logProbForwardProposal <- dnorm(0, proposalCoef, sd = scale, log = TRUE) proposalLogProb <- model$calculate(calcNodes) log_accept_prob <- proposalLogProb - currentLogProb - logProbForwardProposal } accept <- decide(log_accept_prob) if(accept) { copy(from = model, to = mvSaved, row = 1, nodes = calcNodes, logProb = TRUE) } else { copy(from = mvSaved, to = model, row = 1, nodes = calcNodes, logProb = TRUE) } }, methods = list(reset = function() { }) )

Now we’ll set up and run the samplers:

mcmcConf1 <- configureMCMC(simpleModel1) mcmcConf1$removeSamplers('z2') mcmcConf1$addSampler(target = 'z2', type = RJindicatorSampler, control = list(scale = 1, coef = 'beta2')) mcmcConf1$removeSamplers('beta2') mcmcConf1$addSampler(target = 'beta2', type = 'RW_sampler_nonzero_indicator', control = list(indicator = 'z2', RWcontrol = list(adaptive = TRUE, adaptInterval = 100, scale = 1, log = FALSE, reflective = FALSE))) mcmc1 <- buildMCMC(mcmcConf1) compiled1 <- compileNimble(simpleModel1, mcmc1) compiled1$mcmc1$run(10000)

## |-------------|-------------|-------------|-------------| ## |-------------------------------------------------------|

## NULL

samples1 <- as.matrix(compiled1$mcmc1$mvSamples)

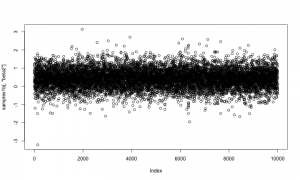

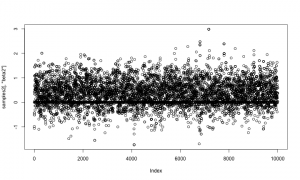

Here is a trace plot of the beta2 (slope) samples. The thick line at zero corresponds to having beta2 removed from the model.

plot(samples1[,'beta2'])

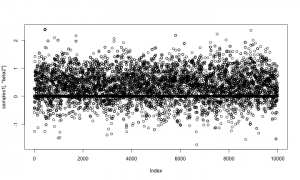

And here is a trace plot of the z2 (indicator variable) samples.

plot(samples1[,'z2'])

The chains look reasonable.

As a quick check of reasonableness, let’s compare the beta2 samples to what we’d get if it was always included in the model. I’ll do that by setting up default samplers and then removing the sampler for z2 (and z2 should be 1).

mcmcConf1b <- configureMCMC(simpleModel1) mcmcConf1b$removeSamplers('z2') mcmc1b <- buildMCMC(mcmcConf1b) compiled1b <- compileNimble(simpleModel1, mcmc1b) compiled1b$mcmc1b$run(10000)

## |-------------|-------------|-------------|-------------| ## |-------------------------------------------------------|

## NULL

samples1b <- as.matrix(compiled1b$mcmc1b$mvSamples) plot(samples1b[,'beta2'])

That looks correct, in the sense that the distribution of beta2 given that it’s in the model (using reversible jump) should match the distribution of beta2 when it is

always in the model.

RJ implementation 2, without indicator variables

Now I’ll set up the second version of the model and samplers. I won’t include the indicator variable in the model but will instead include the prior probability for inclusion in the sampler. One added bit of generality is that being “out of the model” will be defined as taking some fixedValue, to be provided, which will typically but not necessarily be zero. These functions are very similar to the ones above.

Here is the code to define and build a model without the indicator variable:

simpleCode2 <- nimbleCode({ beta0 ~ dnorm(0, sd = 100) beta1 ~ dnorm(0, sd = 100) beta2 ~ dnorm(0, sd = 100) sigma ~ dunif(0, 100) for(i in 1:N) { Ypred[i] <- beta0 + beta1 * x1[i] + beta2 * x2[i] Y[i] ~ dnorm(Ypred[i], sd = sigma) } }) simpleModel2 <- nimbleModel(simpleCode2, data = list(Y = Y, x1 = x1, x2 = x2), constants = list(N = N), inits = list(beta0 = 0, beta1 = 0, beta2 = 0, sigma = sd(Y)))

And here are the samplers (again, ignore the warning):

RW_sampler_nonzero <- nimbleFunction( ## "nonzero" is a misnomer because it can check whether it sits at any fixedValue, not just 0 contains = sampler_BASE, setup = function(model, mvSaved, target, control) { regular_RW_sampler <- sampler_RW(model, mvSaved, target = target, control = control$RWcontrol) fixedValue <- control$fixedValue }, run = function() { ## Now there is no indicator variable, so check if the target node is exactly ## equal to the fixedValue representing "not in the model". if(model[[target]] != fixedValue) regular_RW_sampler$run() }, methods = list( reset = function() {regular_RW_sampler$reset()} ))

## Warning in nf_checkDSLcode(code): For this nimbleFunction to compile, these ## functions must be defined as nimbleFunctions or nimbleFunction methods: ## reset.

RJsampler <- nimbleFunction( contains = sampler_BASE, setup = function( model, mvSaved, target, control ) { ## target should be a coefficient to be set to a fixed value (usually zero) or not ## control should have an element called fixedValue (usually 0), ## a scale for jumps to and from the fixedValue, ## and a prior prob of taking its fixedValue fixedValue <- control$fixedValue scale <- control$scale ## The control list contains the prior probability of inclusion, and we can pre-calculate ## this log ratio because it's what we'll need later. logRatioProbFixedOverProbNotFixed <- log(control$prior) - log(1-control$prior) calcNodes <- model$getDependencies(target) }, run = function( ) { ## The reversible-jump moves happen here currentValue <- model[[target]] currentLogProb <- model$getLogProb(calcNodes) if(currentValue != fixedValue) { ## There is no indicator variable, so check if current value matches fixedValue ## propose removing it (setting it to fixedValue) logProbReverseProposal <- dnorm(fixedValue, currentValue, sd = scale, log = TRUE) model[[target]] <<- fixedValue proposalLogProb <- model$calculate(calcNodes) log_accept_prob <- proposalLogProb - currentLogProb - logRatioProbFixedOverProbNotFixed + logProbReverseProposal } else { ## propose adding it proposalValue <- rnorm(1, fixedValue, sd = scale) model[[target]] <<- proposalValue logProbForwardProposal <- dnorm(fixedValue, proposalValue, sd = scale, log = TRUE) proposalLogProb <- model$calculate(calcNodes) log_accept_prob <- proposalLogProb - currentLogProb + logRatioProbFixedOverProbNotFixed - logProbForwardProposal } accept <- decide(log_accept_prob) if(accept) { copy(from = model, to = mvSaved, row = 1, nodes = calcNodes, logProb = TRUE) } else { copy(from = mvSaved, to = model, row = 1, nodes = calcNodes, logProb = TRUE) } }, methods = list(reset = function() { }) )

Now let’s set up and use the samplers

mcmcConf2 <- configureMCMC(simpleModel2) mcmcConf2$removeSamplers('beta2') mcmcConf2$addSampler(target = 'beta2', type = 'RJsampler', control = list(fixedValue = 0, prior = 0.8, scale = 1)) mcmcConf2$addSampler(target = 'beta2', type = 'RW_sampler_nonzero', control = list(fixedValue = 0, RWcontrol = list(adaptive = TRUE, adaptInterval = 100, scale = 1, log = FALSE, reflective = FALSE))) mcmc2 <- buildMCMC(mcmcConf2) compiled2 <- compileNimble(simpleModel2, mcmc2) compiled2$mcmc2$run(10000)

## |-------------|-------------|-------------|-------------| ## |-------------------------------------------------------|

## NULL

samples2 <- as.matrix(compiled2$mcmc2$mvSamples)

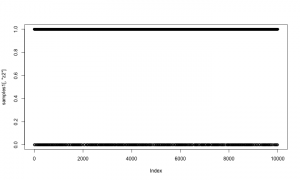

And again let’s look at the samples. As above, the horizontal line at 0 represents having beta2 removed from the model.

plot(samples2[,'beta2'])

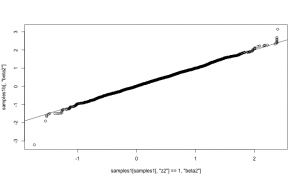

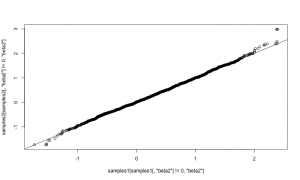

Now let’s compare those results to results from the first method, above. They should match.

mean(samples1[,'beta2']==0)

## [1] 0.12

mean(samples2[,'beta2']==0)

## [1] 0.1173

qqplot(samples1[ samples1[,'beta2'] != 0,'beta2'], samples2[samples2[,'beta2'] != 0,'beta2']) abline(0,1)

They match well. The samplers above could be assigned to arbitrary nodes in a model. The only additional code would arise from adding more samplers to an MCMC configuration. It would also be possible to refine the reversible-jump step to adapt the scale of its jumps in order to achieve better mixing. For example, one could try this method by Ehlers and Brooks. We’re interested in hearing from you if you plan to try using RJMCMC on your own models.

How to apply this for larger models.

En plus des crises existantes, le monde occidental a été frappé par une pénurie de médicaments. Et nous ne parlons pas principalement de la production de quelques molécules à forte intensité de main-d’œuvre, mais des médicaments fortlapersonne de base qui devraient se trouver dans chaque armoire à pharmacie, comme le paracétamol et les antibiotiques, dont la pénurie peut avoir un impact important sur la santé de la nation.

NIMBLE is hiring a programmer

This position includes work to harness parallel processing and automatic differentiation, to generate interfaces with other languages such as Python, to improve NIMBLE’s scope and efficiency for large statistical models, and to build other new features into NIMBLE.

The work will involve programming in R and C++, primarily designing and implementing software involving automated generation of C++ code for class and function definitions, parallel computing, use of external libraries for automatic differentiation and linear algebra, statistical algorithms and related problems. The position will also involve writing documentation and following good open-source software practices.

See here to apply.

Building Particle Filters and Particle MCMC in NIMBLE

This example shows how to construct and conduct inference on a state space model using particle filtering algorithms. nimble currently has versions of the bootstrap filter, the auxiliary particle filter, the ensemble Kalman filter, and the Liu and West filter implemented. Additionally, particle MCMC samplers are available and can be specified for both univariate and multivariate parameters.

Model Creation

Assume is the latent state and

is the observation at time

for

. We define our state space model as

with initial states

and prior distributions

where denotes a normal distribution with mean

and standard deviation

, and

is a shifted, scaled

-distribution with center parameter

, scale parameter

, and

degrees of freedom.

We specify and build our state space model below, using time points:

## load the nimble library and set seed library('nimble') set.seed(1) ## define the model stateSpaceCode <- nimbleCode({ a ~ dunif(-0.9999, 0.9999) b ~ dnorm(0, sd = 1000) sigPN ~ dunif(1e-04, 1) sigOE ~ dunif(1e-04, 1) x[1] ~ dnorm(b/(1 - a), sd = sigPN/sqrt((1-a*a))) y[1] ~ dt(mu = x[1], sigma = sigOE, df = 5) for (i in 2:t) { x[i] ~ dnorm(a * x[i - 1] + b, sd = sigPN) y[i] ~ dt(mu = x[i], sigma = sigOE, df = 5) } }) ## define data, constants, and initial values data <- list( y = c(0.213, 1.025, 0.314, 0.521, 0.895, 1.74, 0.078, 0.474, 0.656, 0.802) ) constants <- list( t = 10 ) inits <- list( a = 0, b = .5, sigPN = .1, sigOE = .05 ) ## build the model stateSpaceModel <- nimbleModel(stateSpaceCode, data = data, constants = constants, inits = inits, check = FALSE)

## defining model...

## building model...

## setting data and initial values...

## running calculate on model (any error reports that follow may simply ## reflect missing values in model variables) ...

##

## checking model sizes and dimensions...

## note that missing values (NAs) or non-finite values were found in model ## variables: x, lifted_a_times_x_oBi_minus_1_cB_plus_b. This is not an error, ## but some or all variables may need to be initialized for certain algorithms ## to operate properly.

##

## model building finished.

Construct and run a bootstrap filter

We next construct a bootstrap filter to conduct inference on the latent states of our state space model. Note that the bootstrap filter, along with the auxiliary particle filter and the ensemble Kalman filter, treat the top-level parameters a, b, sigPN, and sigOE as fixed. Therefore, the bootstrap filter below will proceed as though a = 0, b = .5, sigPN = .1, and sigOE = .05, which are the initial values that were assigned to the top-level parameters.

The bootstrap filter takes as arguments the name of the model and the name of the latent state variable within the model. The filter can also take a control list that can be used to fine-tune the algorithm’s configuration.

## build bootstrap filter and compile model and filter bootstrapFilter <- buildBootstrapFilter(stateSpaceModel, nodes = 'x') compiledList <- compileNimble(stateSpaceModel, bootstrapFilter)

## compiling... this may take a minute. Use 'showCompilerOutput = TRUE' to see C++ compiler details.

## compilation finished.

## run compiled filter with 10,000 particles. ## note that the bootstrap filter returns an estimate of the log-likelihood of the model. compiledList$bootstrapFilter$run(10000)

## [1] -28.13009

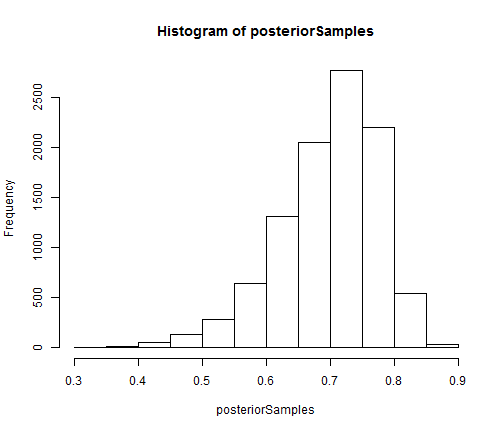

Particle filtering algorithms in nimble store weighted samples of the filtering distribution of the latent states in the mvSamples modelValues object. Equally weighted samples are stored in the mvEWSamples object. By default, nimble only stores samples from the final time point.

## extract equally weighted posterior samples of x[10] and create a histogram posteriorSamples <- as.matrix(compiledList$bootstrapFilter$mvEWSamples) hist(posteriorSamples)

The auxiliary particle filter and ensemble Kalman filter can be constructed and run in the same manner as the bootstrap filter.

Conduct inference on top-level parameters using particle MCMC

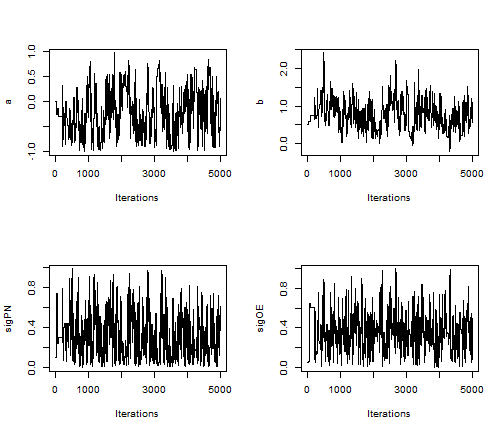

Particle MCMC can be used to conduct inference on the posterior distribution of both the latent states and any top-level parameters of interest in a state space model. The particle marginal Metropolis-Hastings sampler can be specified to jointly sample the a, b, sigPN, and sigOE top level parameters within nimble‘s MCMC framework as follows:

## create MCMC specification for the state space model stateSpaceMCMCconf <- configureMCMC(stateSpaceModel, nodes = NULL) ## add a block pMCMC sampler for a, b, sigPN, and sigOE stateSpaceMCMCconf$addSampler(target = c('a', 'b', 'sigPN', 'sigOE'), type = 'RW_PF_block', control = list(latents = 'x')) ## build and compile pMCMC sampler stateSpaceMCMC <- buildMCMC(stateSpaceMCMCconf) compiledList <- compileNimble(stateSpaceModel, stateSpaceMCMC, resetFunctions = TRUE)

## compiling... this may take a minute. Use 'showCompilerOutput = TRUE' to see C++ compiler details.

## compilation finished.

## run compiled sampler for 5000 iterations compiledList$stateSpaceMCMC$run(5000)

## |-------------|-------------|-------------|-------------| ## |-------------------------------------------------------|

## NULL

## create trace plots for each parameter library('coda')

par(mfrow = c(2,2)) posteriorSamps <- as.mcmc(as.matrix(compiledList$stateSpaceMCMC$mvSamples)) traceplot(posteriorSamps[,'a'], ylab = 'a') traceplot(posteriorSamps[,'b'], ylab = 'b') traceplot(posteriorSamps[,'sigPN'], ylab = 'sigPN') traceplot(posteriorSamps[,'sigOE'], ylab = 'sigOE')

The above RW_PF_block sampler uses a multivariate normal proposal distribution to sample vectors of top-level parameters. To sample a scalar top-level parameter, use the RW_PF sampler instead.

Version 0.6-3 released.

Version 0.6-3 is a very minor release primarily intended to address some CRAN packaging issues that do not affect users. We also fixed a bug involving MCEM functionality and a bug that prevented use of the sd() and var() functions in BUGS code.

For most users, there is probably no need to upgrade from version 0.6-2.

Version 0.6-2 released!

Version 0.6-2 is a minor release with a variety of useful functionality for users.

Changes as of Version 0.6-2 include:

- user-defined distributions can be used in BUGS code without needing to call the registerDistributions() function (unless one wants to specify alternative parameterizations, distribution range or that the distribution is discrete),

- users can now specify the use of conjugate (Gibbs) samplers for nodes in a model,

- NIMBLE will now check the run code of nimbleFunctions for functions (in particular R functions) that are not part of the DSL and will not compile,

- added getBound() functionality to find the lower and upper bounds of a node either from R or in DSL code,

- added functionality to get distributional information about a node in a model or information about a distribution based on the name of the density function; these may be useful in setup code for algorithms,

- multinomial and categorical distributions now allow ‘probs’ arguments that do not sum to one (these will be internally normalized) and

- a variety of bug fixes.

Please see the NEWS file in the installed package for more details.

NIMBLE package for hierarchical modeling (MCMC and more) faster and more flexible in version 0.6-1

NIMBLE version 0.6-1 has been released on CRAN and at r-nimble.org.

NIMBLE is a system that allows you to:

- Write general hierarchical statistical models in BUGS code and create a corresponding model object to use in R.

- Build Markov chain Monte Carlo (MCMC), particle filters, Monte Carlo Expectation Maximization (MCEM), or write generic algorithms that can be applied to any model.

- Compile models and algorithms via problem-specific generated C++ that NIMBLE interfaces to R for you.

Most people associate BUGS with MCMC, but NIMBLE is about much more than that. It implements and extends the BUGS language as a flexible system for model declaration and lets you do what you want with the resulting models. Some of the cool things you can do with NIMBLE include:

- Extend BUGS with functions and distributions you write in R as nimbleFunctions, which will be automatically turned into C++ and compiled into your model.

- Program with models written in BUGS code: get and set values of variables, control model calculations, simulate new values, use different data sets in the same model, and more.

- Write your own MCMC samplers as nimbleFunctions and use them in combination with NIMBLE’s samplers.

- Write functions that use MCMC as one step of a larger algorithm.

- Use standard particle filter methods or write your own.

- Combine particle filters with MCMC as Particle MCMC methods.

- Write other kinds of model-generic algorithms as nimbleFunctions.

- Compile a subset of R’s math syntax to C++ automatically, without writing any C++ yourself.

Some early versions of NIMBLE were not on CRAN because NIMBLE’s system for on-the-fly compilation via generating and compiling C++ from R required some extra work for CRAN packaging, but now it’s there. Compared to earlier versions, the new version is faster and more flexible in a lot of ways. Building and compiling models and algorithms could sometimes get bogged down for large models, so we streamlined those steps quite a lot. We’ve generally increased the efficiency of C++ generated by the NIMBLE compiler. We’ve added functionality to what can be compiled to C++ from nimbleFunctions. And we’ve added a bunch of better error-trapping and informative messages, although there is still a good way to go on that. Give us a holler on the nimble-users list if you run into questions.

Version 0.5-1 of NIMBLE released!

Version 0.5-1 is officially a minor release, but it actually has quite a bit in it, in particular the addition/improvement of a number of our algorithms. In addition there are some more improvements in our speed in building and compiling models and algorithms.

Changes as of Version 0.5-1 include:

- the addition of a variety of sequential Monte Carlo (aka particle filtering) algorithms, including particle MCMC samplers for use within an MCMC,

- a greatly improved MCEM algorithm with an automated convergence and stopping criterion,

- new syntax for declaring multivariate variables in the NIMBLE DSL, namely numeric(), integer(), matrix(), and array(), with declare() now deprecated,

- addition of the multivariate-t distribution for use in BUGS and DSL code,

- a new binary MCMC sampler for discrete 0/1 nodes,

- addition of functionality to our random walk sampler to allow sampling on the log scale and use of reflection,

- more flexible use of forwardsolve(), backsolve(), and solve(), including use in BUGS code, and

- a variety of other items.

Please see the NEWS file in the source package.

NIMBLE: A new way to do MCMC (and more) from BUGS code in R

Yesterday we released version 0.5 of NIMBLE on our web site, r-nimble.org. (We’ll get it onto CRAN soon, but it has some special needs to work out.) NIMBLE tries to fill a gap in what R programmers and analysts can do with general hierarchical models. Packages like WinBUGS, OpenBUGS, JAGS and Stan provide a language for writing a model flexibly, and then they provide one flavor of MCMC. These have been workhorses of the Bayesian revolution, but they don’t provide much control over how the MCMC works (what samplers are used) or let one do anything else with the model (though Stan provides some additional fitting methods).

The idea of NIMBLE has been to provide a layer of programmability for algorithms that use models written in BUGS. We adopted BUGS as a model declaration language because these is so much BUGS code out there and so many books that use BUGS for teaching Bayesian statistics. Our implementation processes BUGS code in R and creates a model object that you can program with. For MCMC, we provide a default set of samplers, but these choices can be modified. It is easy to write your own sampler and add it to the MCMC. And it is easy to add new distributions and functions for use in BUGS code, something that hasn’t been possible (in any easy way) before. These features can allow big gains in MCMC efficiency.

MCMCs are heavily computational, so NIMBLE includes a compiler that generates C++ specific to a model and algorithm (MCMC samplers or otherwise), compiles it, loads it into R and gives you an interface to it. To be able to compile an algorithm, you need to write it as a nimbleFunction rather than a regular R function. nimbleFunctions can interact with model objects, and they can use a subset of R for math and flow-control. Among other things, the NIMBLE compiler automatically generates code for the Eigen C++ linear algebra library and manages all the necessary interfaces.

Actually, NIMBLE is not specific to MCMC or to Bayesian methods. You can write other algorithms to use whatever model you write in BUGS code. Here’s one simple example: in the past if you wanted to do a simulation study for a model written in BUGS code, you had to re-write the model in R just to simulate from it. With NIMBLE you can simulate from the model as written in BUGS and have complete control over what parts of the model you use. You can also query the model about how nodes are related so that you can make an algorithm adapt to what it finds in a model. We have a set of sequential Monte Carlo (particle filter) methods in development that we’ll release soon. But the idea is that NIMBLE provides a platform for others to develop and disseminate model-generic algorithms.

NIMBLE also extends BUGS in a bunch of ways that I won’t go into here. And it has one major limitation right now: it doesn’t handle models with stochastic indices, like latent class membership models.

Here is a toy example of what it looks like to set up and run an MCMC using NIMBLE.

library(nimble) myBUGScode <- nimbleCode({ mu ~ dnorm(0, sd = 100) ## uninformative prior sigma ~ dunif(0, 100) for(i in 1:10) y[i] ~ dnorm(mu, sd = sigma) }) myModel <- nimbleModel(myBUGScode)

myData <- rnorm(10, mean = 2, sd = 5) myModel$setData(list(y = myData)) myModel$setInits(list(mu = 0, sigma = 1)) myMCMC <- buildMCMC(myModel) compiled <- compileNimble(myModel, myMCMC) compiled$myMCMC$run(10000)

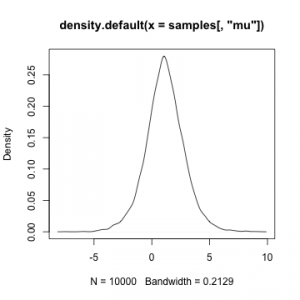

samples <- as.matrix(compiled$myMCMC$mvSamples) plot(density(samples[,'mu']))

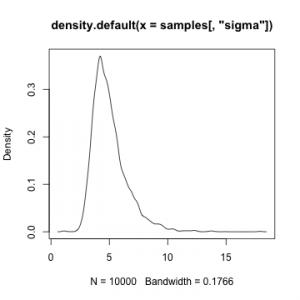

plot(density(samples[,'sigma']))

NIMBLE paper in Journal of Computational and Graphical Statistics

Our paper giving an overview on the rationale and design of NIMBLE has appeared online in accepted manuscript form at the Journal of Computational and Graphical Statistics. You can get it here.

Version 0.5 released!

We’ve just released the next major version of NIMBLE.

Changes include

- more efficient computations for conjugate sampling,

- additional automated checking of BUGS syntax to improve NIMBLE’s warning/error messages,

- new API functionality to allow the use of syntax such as model$calculate(), etc. (syntax such as calculate(model) still works),

- new API functionality for MCMC sampler specification,

- improvements in speed and memory use in building models,

- addition of forwardsolve, backsolve, and solve to the NIMBLE DSL, and

- a variety of other items.

More details in the NEWS file that accompanies the package.

We anticipate being on CRAN in coming weeks and a next release soon that will include a full suite of sequential Monte Carlo (i.e., particle filtering) algorithms.